Where on earth have you been?

Sometimes, you just have to remember to take a step back every once in a while. It's good for you, and helps you come back with a fresh mindset.

I've been taking a little break. Sometimes this whole "thing" starts to feel like a bit of an obsession, or even like a second job, and I really wasn't a huge fan of that idea. Things were picking up again, and I figured I deserved a little break.

However, that didn't stop me from adding to, working on, and improving the lab here and there over the last couple of months.

Yet another VMHost

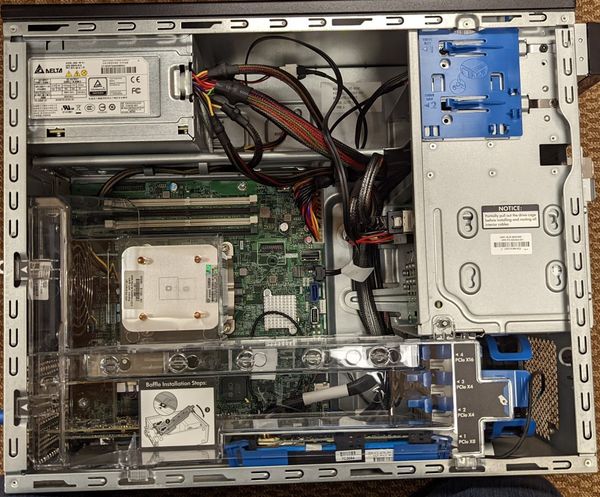

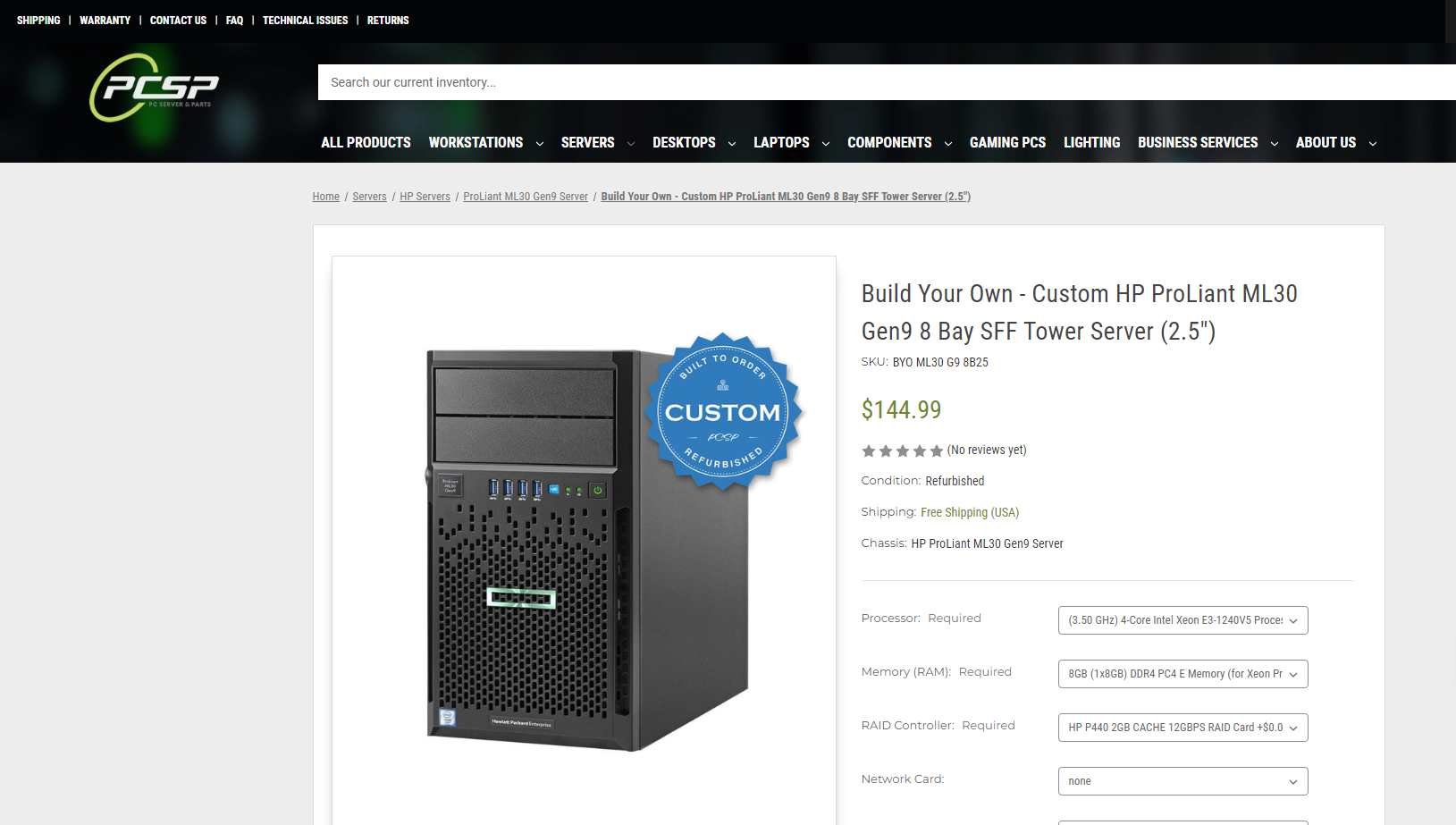

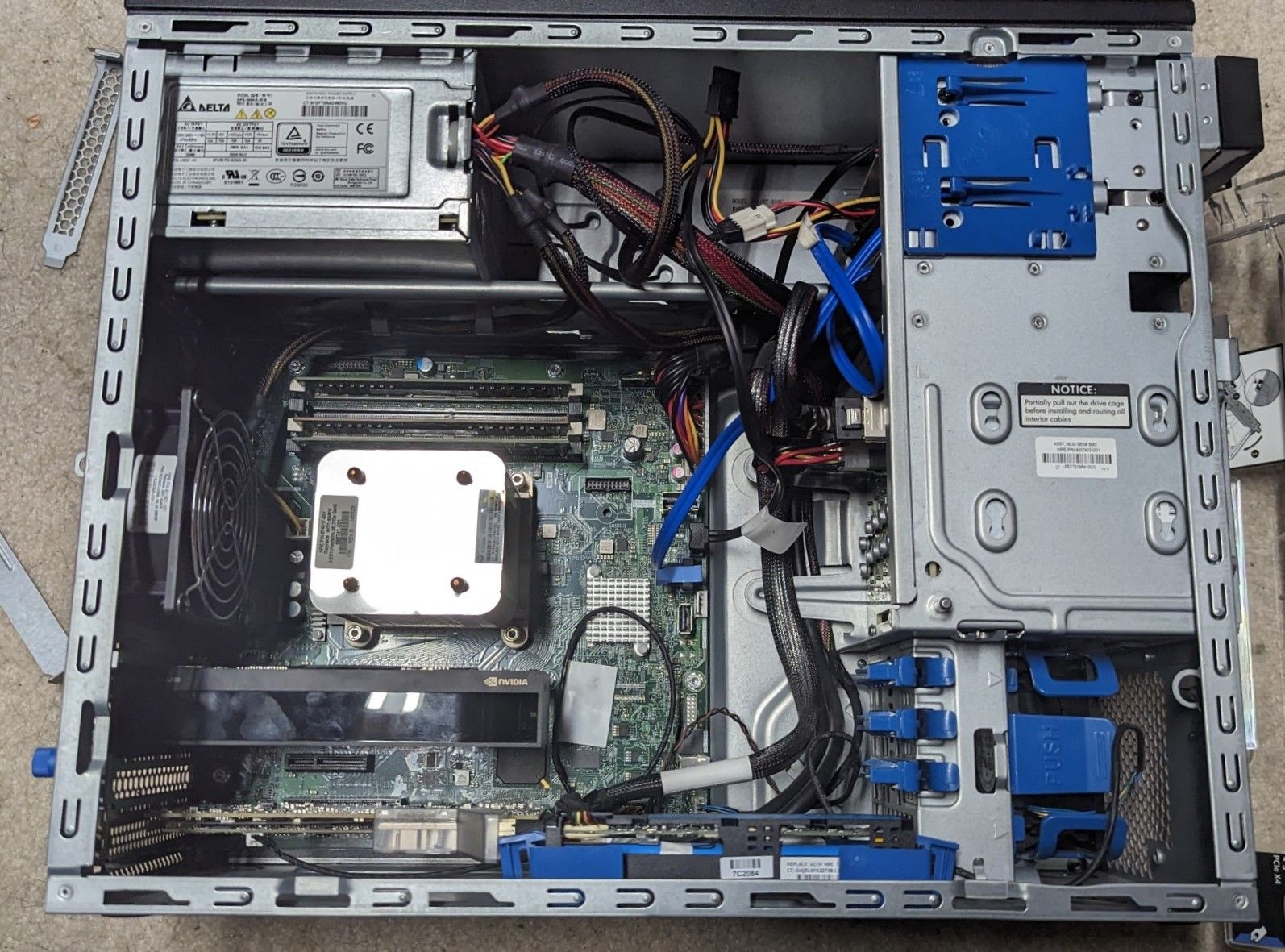

I decided that it was time to purchase yet another VMHost, and I went with another ML30G9. They're absolute powerhouses with plenty of PCIe slots, 4 DIMM slots, and all the features I wanted for less than $200 shipped.

With this one, I went all-in, installing three 1TB NVMe drives on PCIe x4 adapter cards and 36GB of DDR4 ECC, at the cost of removing the built-in RAID card and accessories. I figured that since I was using NVMe, I wouldn't need the front 8x2.5" cage.

I added this host to my cluster and migrated my game servers over, and voilà, I'd say a job well done.

I had initially intended to run my jump box on this host, but since things didn't quite line up, I decided to take an alternative route. Still, fast I/O is always nice for high-intensity servers like Minecraft, which load and write chunks constantly.

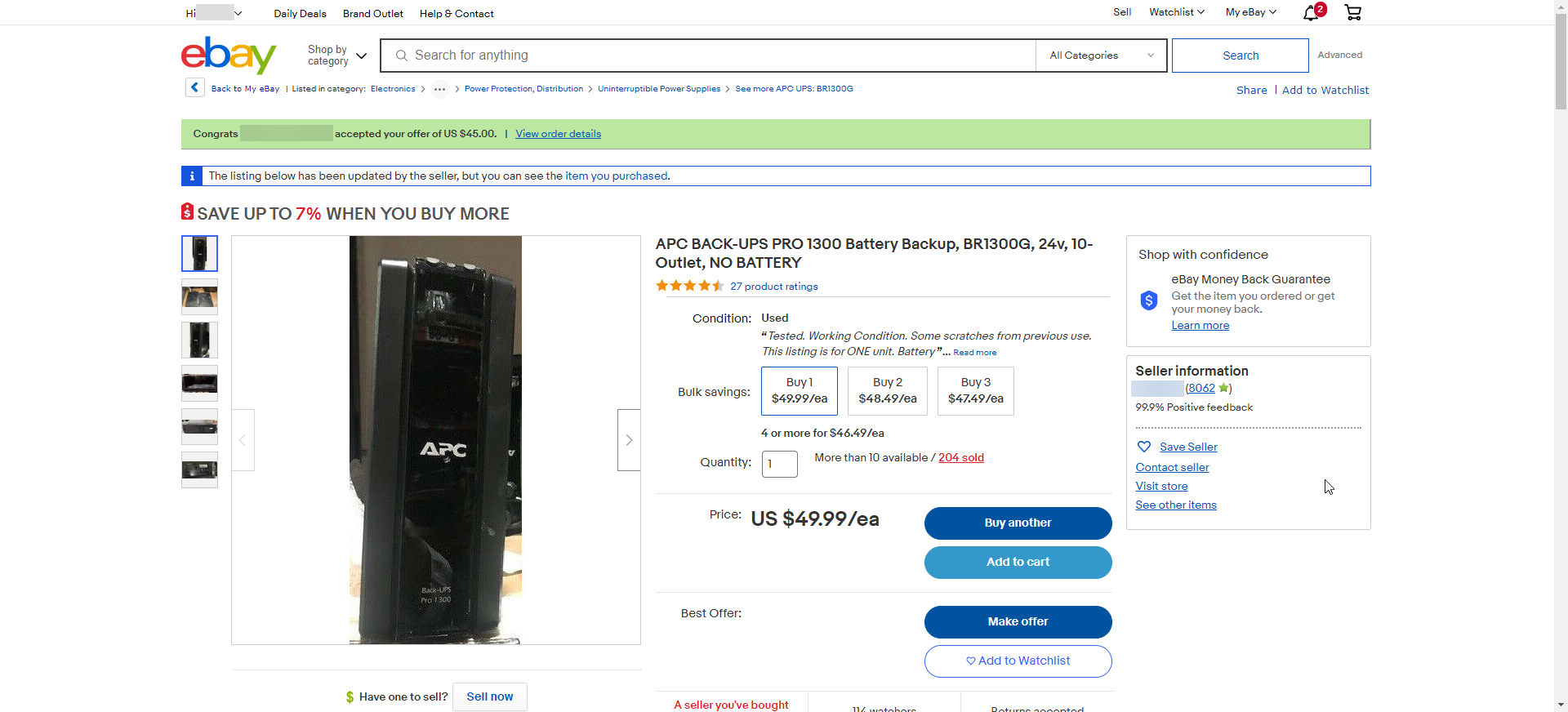

While adding another host, I also realized that I had maxed out my 750W UPS. At the time I had put my Synology NAS, a PoE switch, both of my ec200a's and my ML30G9 on it, and the estimated runtime slowly dropped from 60+ minutes to 25ish minutes.

To "split the load" I purchased a 1000W APC UPS off eBay for $45 without a battery, and a battery for $50 off Amazon, effectively saving $100+ by building it myself. It's wild how doing things yourself can save you quite a bit of money.

I put both ML30G9's on the 1000W UPS, and now I'm seeing an even runtime of ~30-45 minutes (depending on load) on both UPSs, which is plenty of time to shut things down.

The woes of GPU Passthrough

I've been a longtime watcher of CraftComputing. He has some excellent videos on using GPUs in the lab for various tasks; whether it's multi-user virtual gaming rigs or transcoding for Plex, he finds a way to make use of GPUs in his lab.

I wanted to do something similar with my lab. I have a VM I call my "jump-box" that I use when I need a computer and don't have the necessary hardware readily available.

Previously, I had hosted it on one of my ec200a's attached to my SAN, but I noticed that a desktop hosted on one of those isn't exactly "responsive" or practical, and they're pretty limited in terms of expansion options.

Now, this VM has a pretty solid setup: 16GB of RAM, 4c/4t, and 256GB of SSD space, but it doesn't really have any "video render" capabilities. The CPU I'm using (E3-1240 v5) doesn't have onboard graphics, so that's off the table.

I did some research on the topic, and it really boils down to these 3 things:

- The GPU needs to be under 75W, since I don't have any 6/8-pin connectors available.

- It's gotta be slim and short; the shroud in my tower server is very restrictive.

- It has to be capable of 1080p gaming. No wimpy GT 710s, please.

With these ideas in place, I was able to narrow it down to 2 cards.

A) The GTX 1650 Super (Decent, but not a Quadro, so complicated)

B) The RTX A2000 (Superb, but expensive, and rare)

I compared my options, and seeing as both were pretty close in price on the secondhand market, I went with the superior NVIDIA RTX A2000.

After determining what card I wanted, I had to figure out how GPU passthrough works, as I've never really worked with it before.

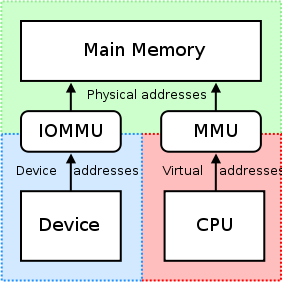

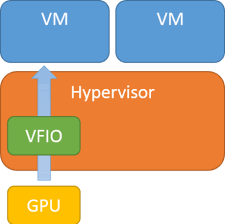

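Now, I keep saying GPU passthrough, but it's really PCIe passthrough, which relies on the IOMMU (Intel VT-d or AMD-Vi) to hand a whole device to a VM. SR-IOV is a related feature that instead splits one device into multiple virtual functions.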

Proxmox uses VFIO to pass through GPUs to a VM, so there was some configuration involved (and of course, trial and error).

But after that, I was able to get the GPU to be detected in my VM. Not too shabby!
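A common sanity check before passing a card through is to look at how the host groups its PCIe devices, since everything in one IOMMU group generally has to go to the VM together. Here's a minimal sketch, assuming a Linux host (such as Proxmox) that exposes its groups under `/sys/kernel/iommu_groups`. The function name `list_iommu_groups` and its optional directory argument are made up for illustration and are not taken from the setup described here.

```shell
#!/bin/sh
# Hypothetical helper: print every IOMMU group and the PCI addresses in it.
# The optional first argument overrides the sysfs root, which is only
# useful for pointing the function at a test directory.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    if [ ! -d "$root" ]; then
        echo "no IOMMU groups under $root (is VT-d/AMD-Vi enabled?)" >&2
        return 1
    fi
    for group in "$root"/*; do
        # Skip anything that is not a group directory.
        [ -d "$group/devices" ] || continue
        for dev in "$group"/devices/*; do
            # An empty group leaves the glob unexpanded; skip it.
            [ -e "$dev" ] || continue
            printf 'group %s: %s\n' "${group##*/}" "${dev##*/}"
        done
    done
}
```

Calling `list_iommu_groups` with no arguments reads the real sysfs tree. Ideally the GPU and its audio function show up in a group of their own.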

The next task was to get video out from it to my laptop, or another machine. I explored my options. RDP obviously wasn't going to work, and CraftComputing did showcase Parsec quite a bit, so I figured I'd give that a shot.

Parsec was designed for remote workers to access latency-sensitive, GPU-accelerated applications like CAD or Unity, but we all know the obvious use case: gaming.

After some tweaking, I was able to get it stable enough to play MW2 (2009) and Minecraft remotely, not too shabby!

I'm not sure if I'm going to keep this as my configuration. The E3-1240 v5 is a bit of a bottleneck compared to the GPU, so down the road I may switch it to a Ryzen-based setup.

Citrix XenApp/XenDesktop

Now this, this is a long story, so grab another cup of whatever you're drinking because this might take a while.

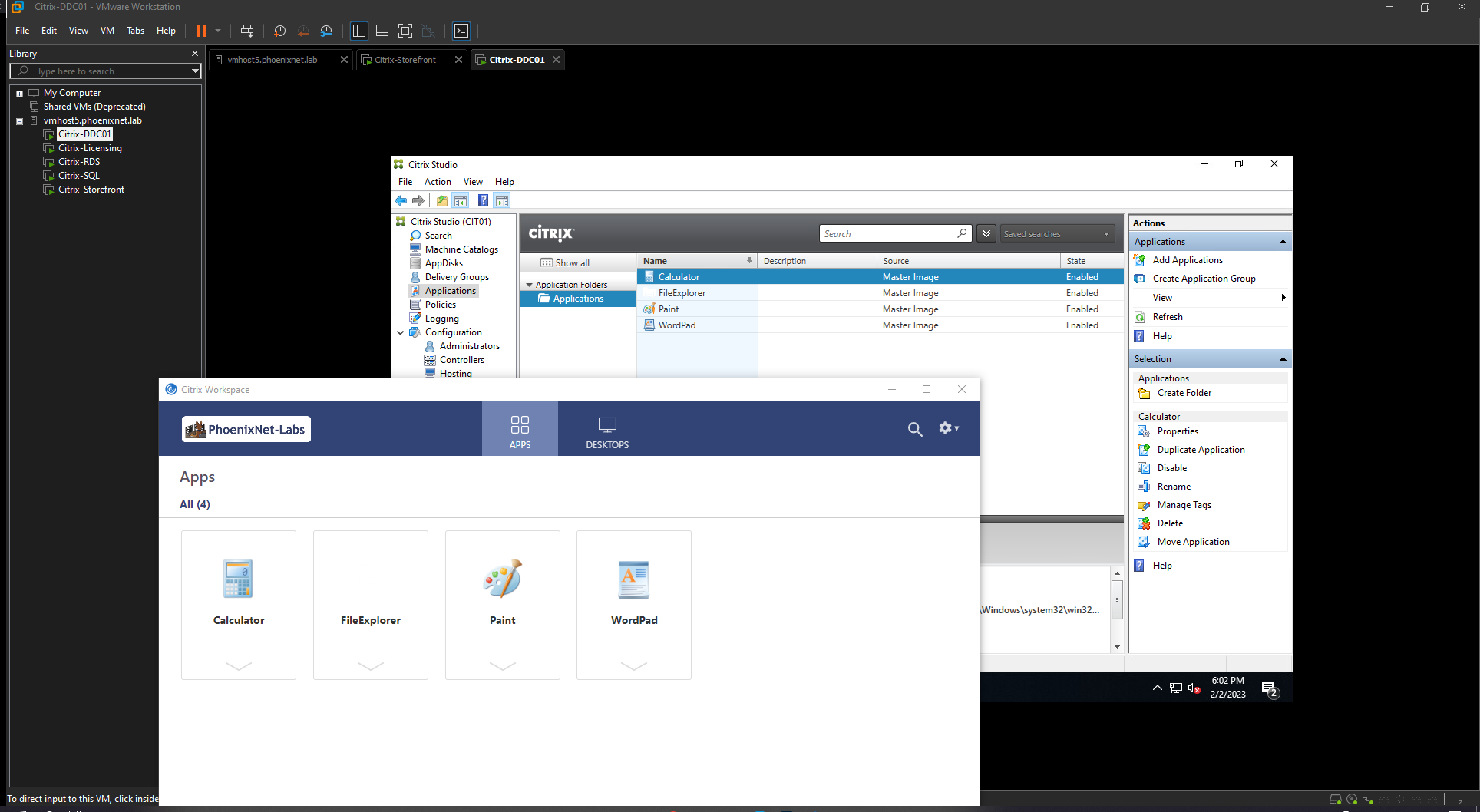

At work, we run Citrix XenApp to service our remote offices, and employees that work remotely. That way, they can access the applications they use daily from anywhere they might be.

Now, I don't directly interface with it, as I'm just a tech, but I do have the curiosity to learn how it all works, which led to this project.

The first attempt:

The plan was to deploy Citrix XenApp and XenDesktop 7.15 LTSR, as my friend had suggested. It should "just work," he proclaimed.

It did not, in fact, "just work". Like, at all.

I installed the license server, OK

I installed the SQL Express 2017 server, OK

I installed the DDC- wait, nope, it failed. I kept fighting the SQL server, only to find out that the guide provided to me used SQL Server 2016 (NOT Express), and by default, Express turns off a handful of features that are required:

- Firewall rules

- TCP/IP and Named Pipes are disabled

After turning them back on, I was able to get the DDC to build the database it needed, and start up.

I deployed the RDS host and purchased some CALs on a whim for giggles. I figured why not, $35 for 50 CALs is pretty good. And then I FUBAR'd the VM, of course.

So I had to fight with Microsoft support over the phone to get my CALs rearmed on the rebuilt VM.

After I got my CALs rearmed, the RDS host was OK.

Then, I set up my DDC to serve applications and the desktop from the RDS host.

And finally, I went to set up StoreFront- NOPE, issues again.

The certificate import process for Citrix StoreFront is NOT as easy as it should be. That said, I'm not using Citrix Gateway / ADC, as I feel it's overkill for what I'm doing in my setup.

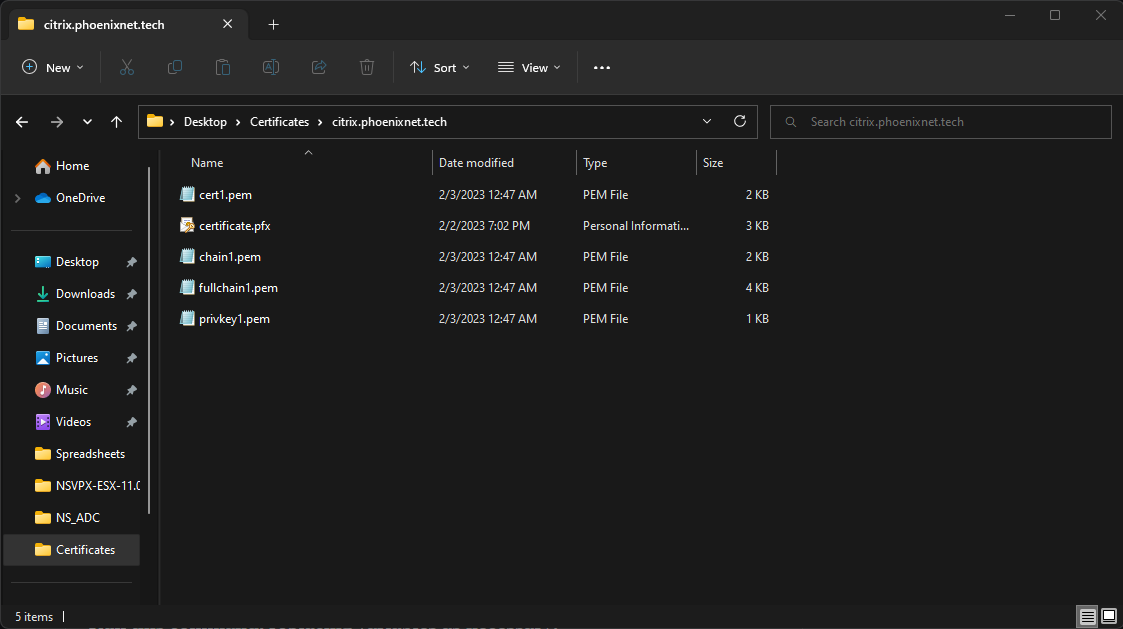

Instead, I'm pulling certificates from NPM (Nginx Proxy Manager), which gets them from Let's Encrypt, a free certificate authority. They need to be converted from .pem format to .pfx to be imported into IIS (Internet Information Services) on Windows Server.

This isn't exactly a "simple task" per se. You'll have to convert the .pem certificates and keys to .pfx using OpenSSL and some command line wizardry.

Here's my little "cheat-sheet" that I use whenever it comes time to renew my certs:

Run this command, replacing variables as necessary:

openssl pkcs12 -export -certpbe PBE-SHA1-3DES -keypbe PBE-SHA1-3DES -nomac -inkey {path/to/key.pem} -in {path/to/cert.cer} -out {path/to/cert.pfx}
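If you renew often, the command above can be wrapped in a small function so a typo in a path fails early instead of halfway through. This is only a sketch: the name `pem_to_pfx` and its argument order are invented here for illustration, and it assumes `openssl` is on your PATH. The flags are the same ones as in the cheat-sheet line, and OpenSSL will still prompt for the export password.

```shell
#!/bin/sh
# Hypothetical wrapper around the cheat-sheet command.
# Usage: pem_to_pfx KEY.pem CERT.pem OUT.pfx
pem_to_pfx() {
    if [ "$#" -ne 3 ]; then
        echo "usage: pem_to_pfx KEY.pem CERT.pem OUT.pfx" >&2
        return 2
    fi
    key="$1"; cert="$2"; out="$3"
    # Bail out before calling openssl if either input is unreadable.
    for f in "$key" "$cert"; do
        if [ ! -r "$f" ]; then
            echo "cannot read $f" >&2
            return 1
        fi
    done
    openssl pkcs12 -export \
        -certpbe PBE-SHA1-3DES -keypbe PBE-SHA1-3DES -nomac \
        -inkey "$key" -in "$cert" -out "$out"
}
```

A call would look like `pem_to_pfx privkey.pem fullchain.pem storefront.pfx`, where all three file names are placeholders.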

After importing the .pfx, and binding it to the site in IIS, I'm able to visit the storefront page and open applications / desktops.

Annnnd then, I realized that this was STUPIDLY out of date, and wildly insecure to be running on the public internet.

The second attempt:

After I felt accomplished, I decided to just tear down the whole environment, as I really don't have a use for it. It was more of an "I can say I've done this" type deal.

However, my company decided to update to 1903 LTSR, and I figured it'd be worth the experience to do it again.

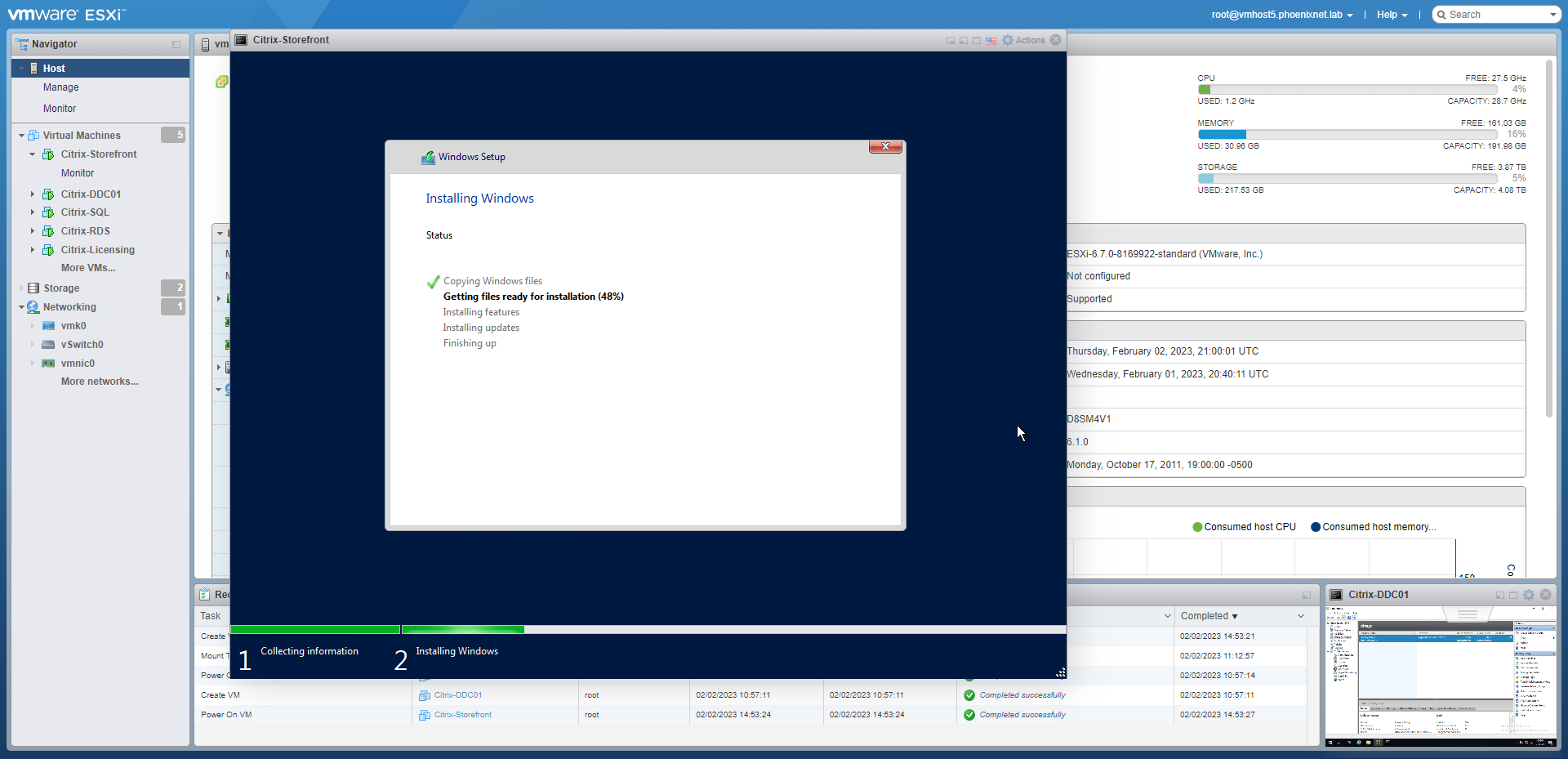

So, I followed the same formula, although this time I had a dedicated host (we'll talk about that later) with enough resources to space things out a bit.

This time around, I deployed SQL Server 2019 Express, and had the same issues as last time (no surprise), but was able to correct them.

After that, I deployed my DDC and, to keep things separated, a standalone RDS VM that pulls licensing from my deployment RDS VM on another host.

I had a little fun with this deployment, customizing the storefront UI, and messing around with features I hadn't used on the last attempt.

I was curious about remote machine access, as we use it at work with our sensitive departments. They can't take their desktops home, and the data is sensitive enough that we can't just let them take it home either. Instead, we allow them to use Citrix to RDP into their machines.

Pretty cool stuff. It's like using an RDS host, but with single-user licensing only, and it's literally their desktop.

Overall, I'd say it was a fun experience, and would recommend it to anyone who wants to work with Citrix XenApp/XenDesktop.

The "playground" box

I call this host a "playground" for learning whatever I want because I literally don't care what happens to it.

I had a friend "donate" his T710 to me. I was over at his place, saw it collecting dust, and figured I'd see if he wanted to part with it. He gladly let it go for the grand total of "get it out of my house".

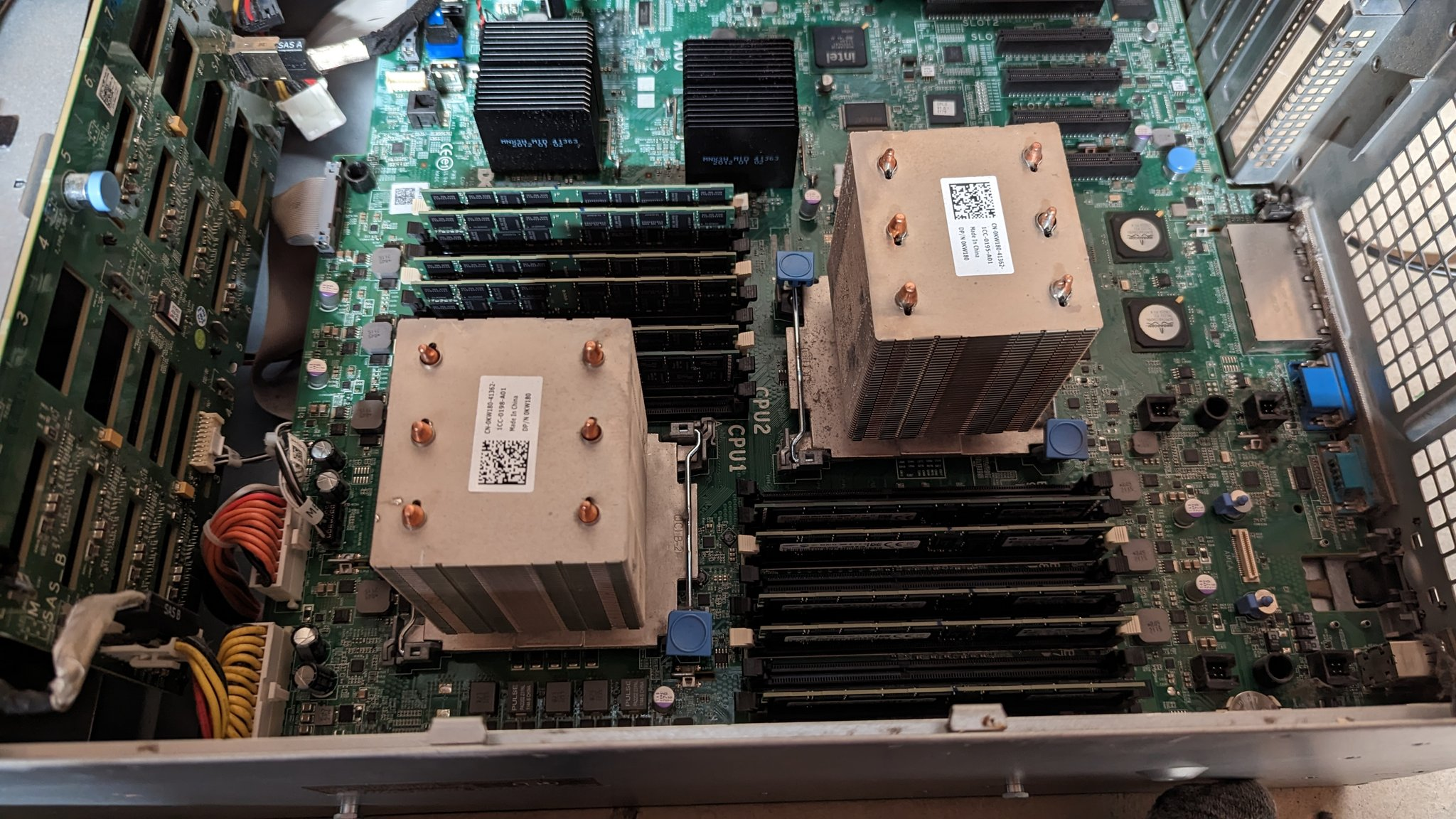

First off, it's an absolute unit, at a hefty 84 pounds when fully loaded. Pretty sure I pulled a muscle pulling it out of my hatchback, but that's the price you pay. It's "fully loaded" as configured, with 192GB of DDR3 ECC RAM, dual E5640's and dual 1100W power supplies.

I personally upgraded the iDRAC Express to iDRAC Enterprise with an $11 module off eBay, swapped out the 540GB drives for 3x 2TB Constellation ES.2 drives I got off eBay forever ago for an old project that flopped, and slapped in an SSD for Proxmox.

I had some "complications" trying to figure out how vSwitching works on Proxmox, so I ultimately decided to switch to VMware ESXi. We use it at work, and the hardware was right, so I don't have any complaints.

After getting it all set up, I made a VLAN for it in UniFi Network, and plugged it into my network. Virtual networking in ESXi is so much easier: just turn on promiscuous mode on the vSwitch, tag the VLAN, and off you go.

I have yet to max it out. I ran that Citrix XenApp/XenDesktop lab on it, no sweat. I might toss GNS3 on it if I'm feeling adventurous, but I'm thinking I might have other plans for it down the road...
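For anyone who prefers the ESXi shell to the web UI, the two steps above can probably be done with `esxcli` as well. The sketch below only prints the commands so you can review them first. It assumes a standard (not distributed) vSwitch, the function name `esxi_vlan_commands` is invented for illustration, and the switch name, port group name, and VLAN ID in the example are placeholders. Check the options against the `esxcli` reference for your ESXi version before running anything.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the esxcli commands that enable
# promiscuous mode on a standard vSwitch and tag a port group with a VLAN.
# Usage: esxi_vlan_commands VSWITCH PORTGROUP VLAN_ID
esxi_vlan_commands() {
    vswitch="$1"; portgroup="$2"; vlan="$3"
    # Reject empty or non-numeric VLAN IDs.
    case "$vlan" in
        ''|*[!0-9]*) echo "VLAN ID must be a number" >&2; return 1 ;;
    esac
    # 1-4094 is the usable range for a single tagged VLAN.
    if [ "$vlan" -lt 1 ] || [ "$vlan" -gt 4094 ]; then
        echo "VLAN ID must be between 1 and 4094" >&2
        return 1
    fi
    printf 'esxcli network vswitch standard policy security set --vswitch-name=%s --allow-promiscuous=true\n' "$vswitch"
    printf 'esxcli network vswitch standard portgroup set --portgroup-name="%s" --vlan-id=%s\n' "$portgroup" "$vlan"
}
```

For example, `esxi_vlan_commands vSwitch0 "Lab Network" 20` prints one command for the security policy and one for the port group tag.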

Until next time!