We're growing again!

I cannot believe I'm writing this, but I decided it was time to kick things up a notch. Like, all the way. We're scaling up!

After doing some selling here and there, plus some strategizing and research, I decided it was time to get real and buy some powerful hardware.

I've always wanted 10Gb networking in my lab. It's a cool technology that absolutely is the future. Maybe not for direct internet communication, but for traffic between servers, and between computers and servers.

I also have been really itching for some real "firepower" in the realm of high-density memory and core counts. Let's just say I decided to go wild.

Horizontal Scaling

In my previous post, I mentioned that I had sold off both of my old HPE ec200a's, as they didn't have much kick. Well, I also scaled out horizontally from that first Hyve server by buying a second one.

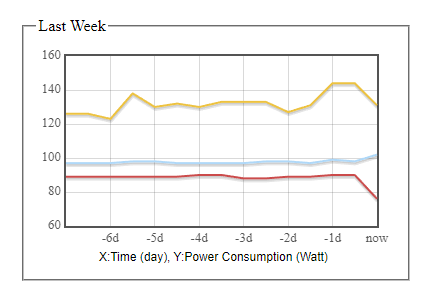

Ideally, both top out at 128GB of RAM and dual E5-2650 v2s, for a comfortable average power draw of 90-110W. Growing beyond that would increase power draw and pull me out of the "sweet spot", which is no bueno.

I also sold off my T710, as it was a power-hungry beast. At idle it was pulling 300W+, which was WAY too much to run 24/7.
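
To put those numbers in perspective, here's a rough sketch of what running each box 24/7 adds up to over a year. The 100W figure is an assumed midpoint of the 90-110W range above, and 300W is the T710's idle draw, so its real total would be higher.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_kwh(watts: float) -> float:
    """Energy used in a year by a device drawing `watts` continuously."""
    return watts * HOURS_PER_YEAR / 1000


t710_kwh = annual_kwh(300)  # idle figure from the post, so a lower bound
hyve_kwh = annual_kwh(100)  # assumed midpoint of the 90-110W range

print(f"T710: {t710_kwh:.0f} kWh/year")
print(f"Hyve: {hyve_kwh:.0f} kWh/year")
print(f"Difference: {t710_kwh - hyve_kwh:.0f} kWh/year")
```

Multiply the difference by your local price per kWh to get the yearly cost gap.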

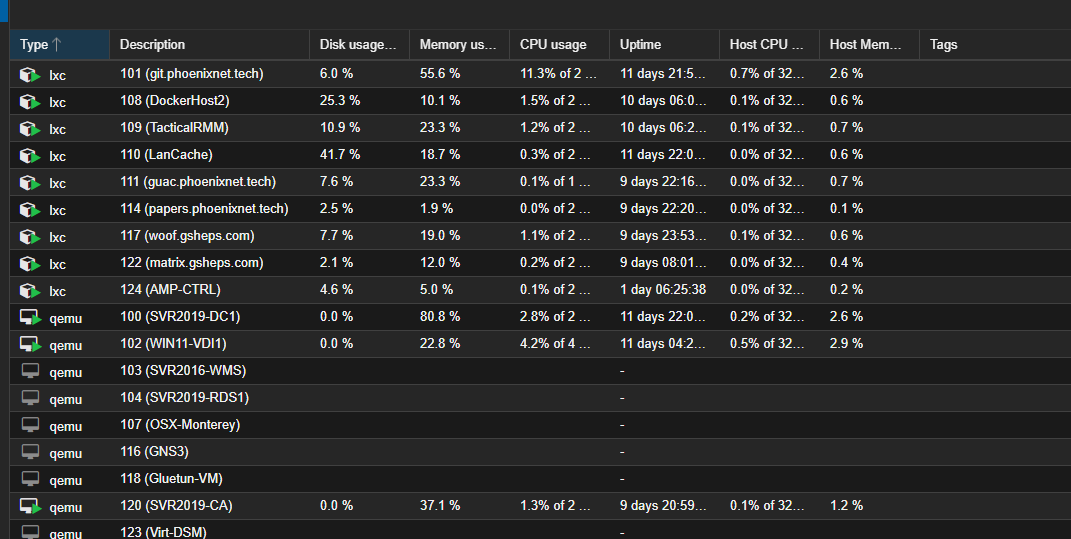

My second Hyve Zeus is configured as below:

- 4x 16GB DDR3 RDIMMs + 2x 8GB DDR3 RDIMMs (80GB total)

- 2x Xeon E5-2650 v2 (8c/16t each, 16c/32t total) @ 2.6GHz base, 3.4GHz turbo

- 250GB Intel DC SSD for Proxmox data

With this addition, I once again feel comfortable with the space I have.

10Gb Networking

FINALLY, I can say that I've deployed 10Gb in my lab and joined the "elite club" of labbers who can say the same.

Now, this isn't full-scale 10Gb. I honestly don't see the appeal in that. For my use case, 10Gb is good for storage infrastructure and not much more.

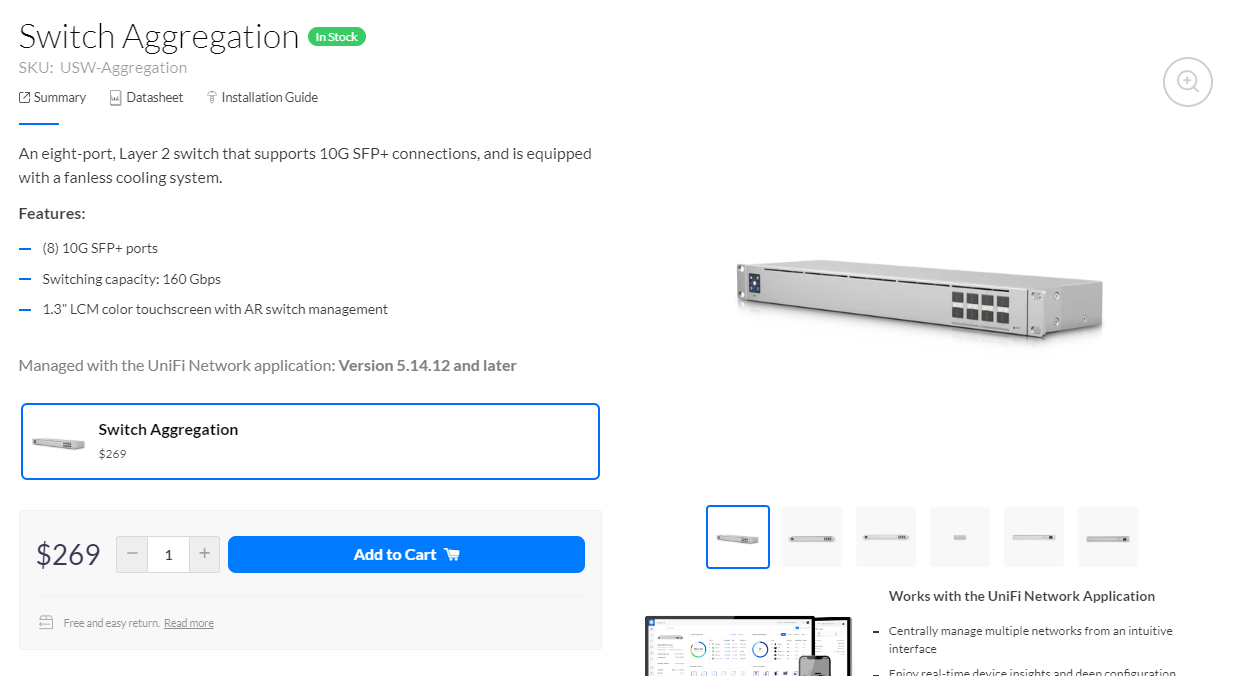

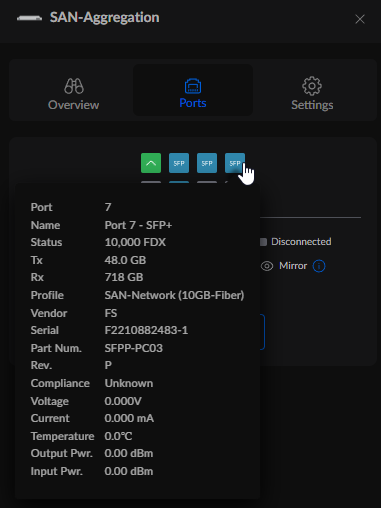

I did A LOT of research trying to find the right switch for the job. I looked at Brocade, Cisco, MikroTik, and a handful of other brands before landing on (you guessed it) the Ubiquiti USW-Aggregation.

The USW-Aggregation is an 8-port SFP+ switch, which gives me plenty of room to grow: 4 hosts and 1 uplink take 5 ports, leaving 3 to do whatever I want with.
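
If you're planning your own port budget, the math is simple enough to sketch. The `spare_ports` helper and its `links_per_host` parameter are my own illustration, not anything from Ubiquiti.

```python
def spare_ports(total_ports: int, hosts: int, uplinks: int, links_per_host: int = 1) -> int:
    """Return how many switch ports are left after cabling hosts and uplinks."""
    used = hosts * links_per_host + uplinks
    if used > total_ports:
        raise ValueError(f"need {used} ports, switch only has {total_ports}")
    return total_ports - used


# 8-port switch, 4 hosts with one link each, 1 uplink
print(spare_ports(total_ports=8, hosts=4, uplinks=1))  # 3
```

Bumping `links_per_host` to 2 with the same numbers raises an error, since 4 hosts with two links each plus an uplink would need 9 ports.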

The next thing I had to research was how to connect it all together.

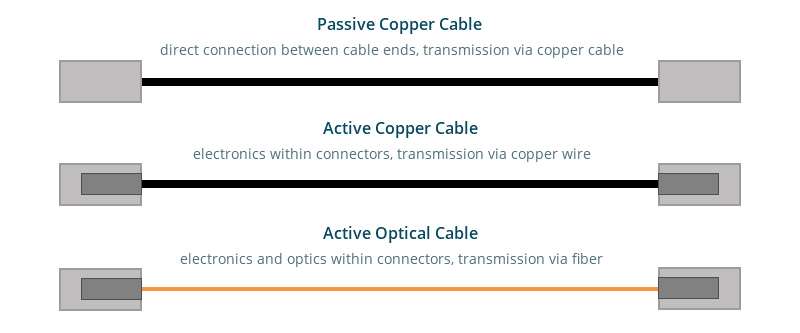

There are so many options out there. I could do fiber with optical modules, or 10GBASE-T RJ45 modules and Cat 6 cables, or the more "cost effective" solution: DAC cables.

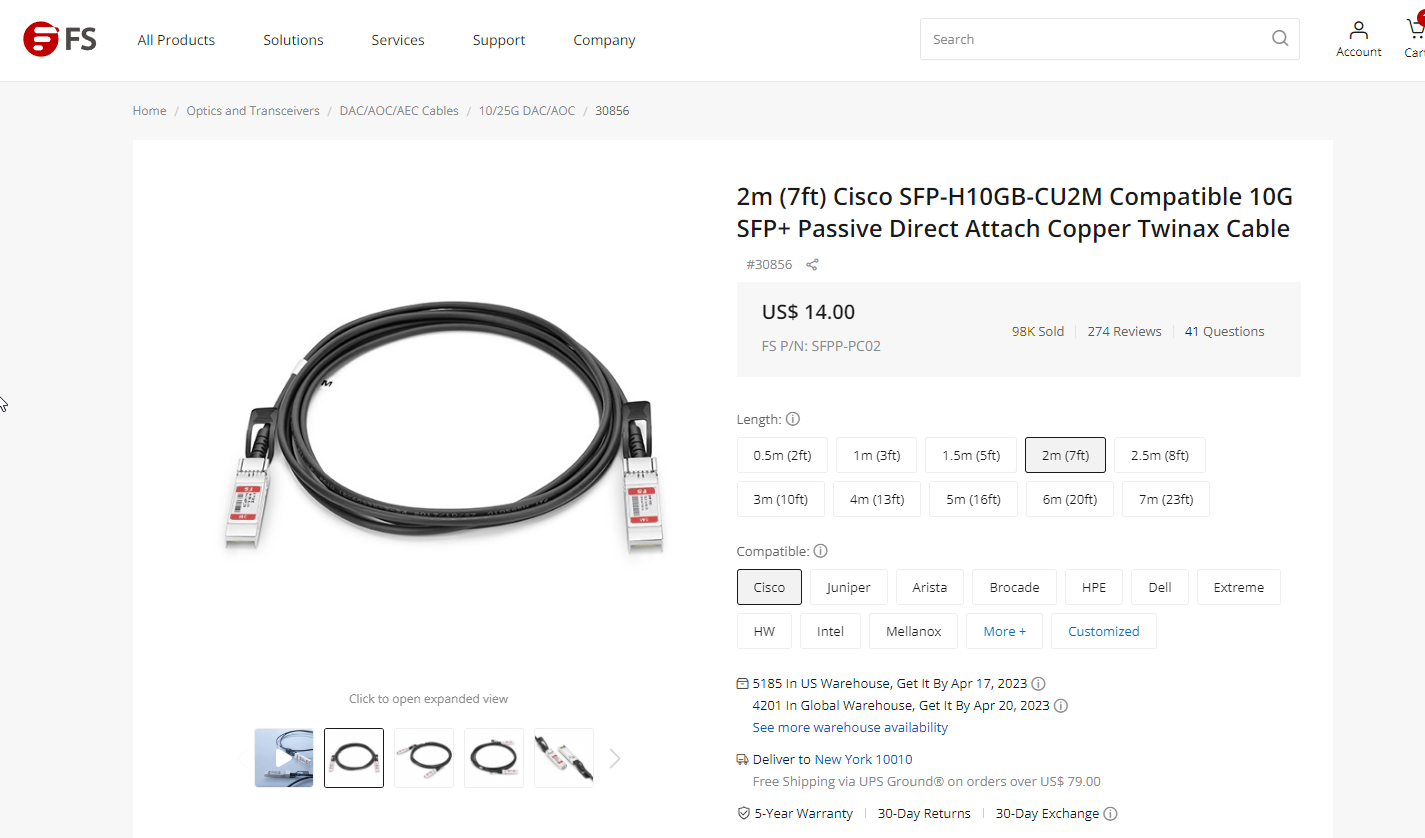

I did some additional research on manufacturers and costs, and landed on FS.com Cisco-programmed DACs. Ubiquiti's compatibility chart lists Cisco modules as working on their equipment out of the box, and FS.com's pricing was rather competitive. Not to mention their sales associate was a pleasure to work with.

But wait, that's not all! Now you need NICs! You can't just plug DACs into regular ol' Ethernet ports and expect it to magically work, right?

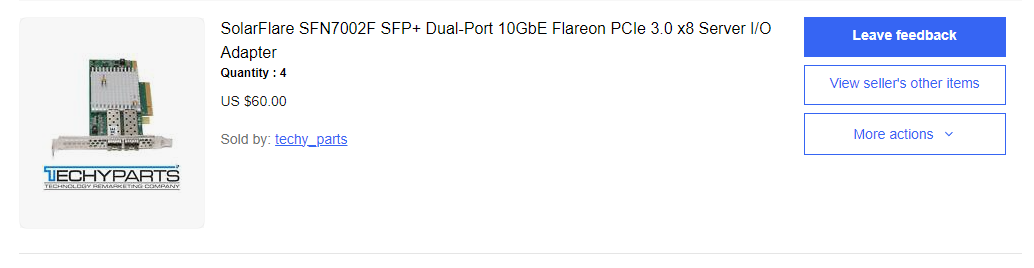

I was pretty tired of doing research at this point, so I just asked around to see what the most "price effective" 10Gb SFP+ NIC on the used market was, and Solarflare kept coming up. So I did my thing, lowballed some eBay seller, and got 4 NICs for $15/ea. As a bonus, they were dual-port NICs!

After waiting what felt like forever, I got everything in the mail, and got to work implementing the upgrade!

Every host got its NIC installed, I dropped in the switch, connected it all together, and voila! Well, not quite...

The next hurdle was: how am I going to distribute the VM filesystem across 4 hosts?

I'm a sucker for not changing too much, so I went with NFS for the shared storage, but I was also short on capacity.

After buying a datacenter's worth of Intel DC SSDs and those darn HPE 2.5" caddies off eBay, and loading them into the HPE ML30 Gen9, I was able to check that off the list.

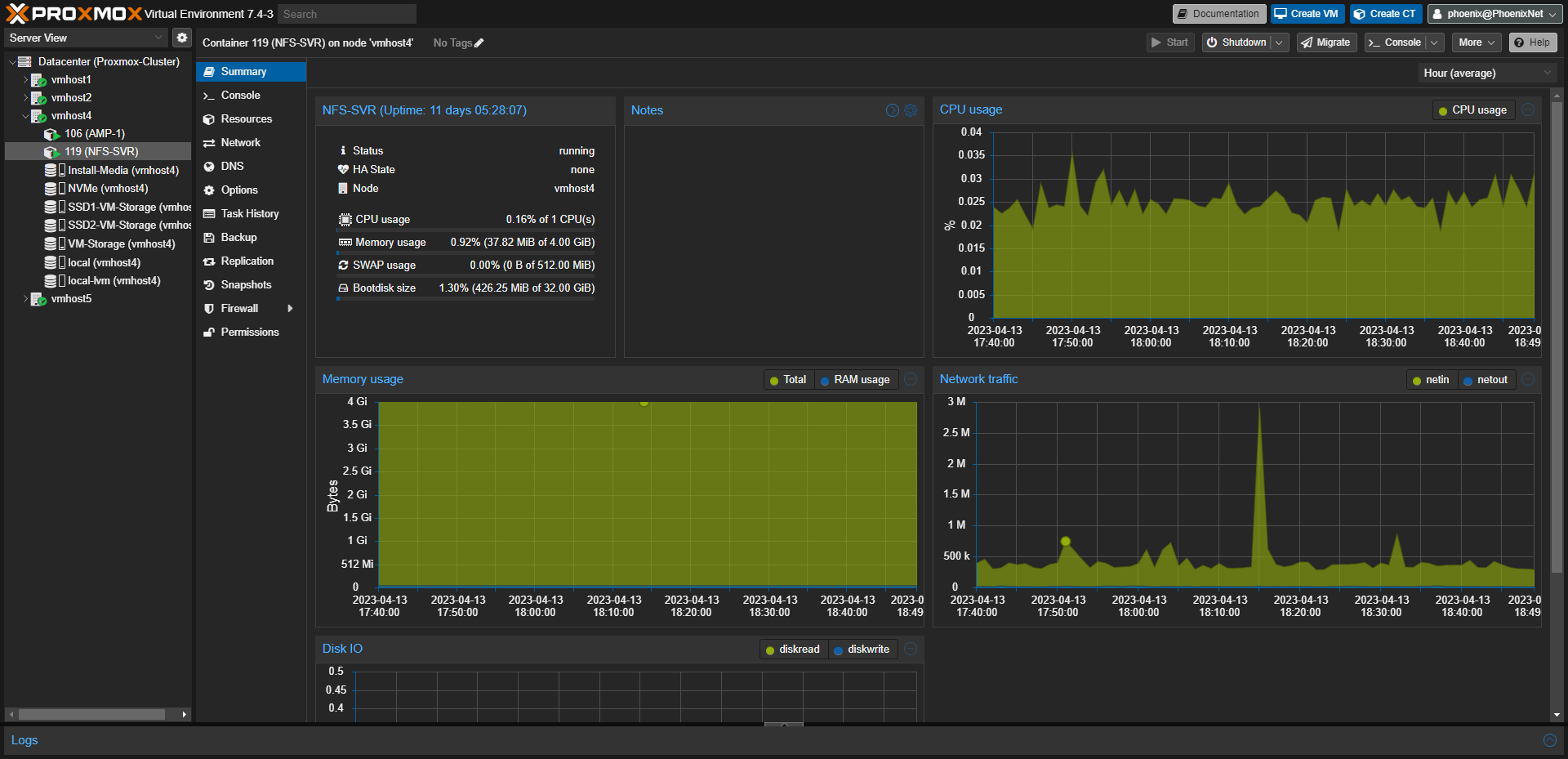

Then, I just created the ZFS volumes in Proxmox and passed them through to an LXC running an NFS server, configured to serve storage over the 10Gb NIC.
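
For anyone curious what the NFS side of that can look like, here's a minimal sketch that builds one `/etc/exports` entry restricted to a single subnet. The path `/mnt/vmstore` and the subnet `10.10.10.0/24` are made-up placeholders, not my actual config, and the export options are just common defaults.

```python
from ipaddress import ip_network


def exports_line(path, subnet, options=("rw", "sync", "no_subtree_check")):
    """Build one /etc/exports entry limiting an export to a single subnet."""
    network = ip_network(subnet)  # raises ValueError on a malformed subnet
    return f"{path} {network.with_prefixlen}({','.join(options)})"


# Hypothetical mount point and storage subnet, for illustration only
print(exports_line("/mnt/vmstore", "10.10.10.0/24"))
```

Limiting the export to the subnet that lives on the 10Gb interfaces is one way to keep storage traffic off the slower network.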

Finally, I was able to say that I was finished. I migrated my VMs and, presto: 10Gb, solid-state VM storage in the lab! Exciting!

New projects

Now that I've got room to grow, I thought I'd share some projects that I'm working on now but that aren't finished "enough" to share with you all yet.

I'm working on a handful of things, but I'll just toss them into a list:

- Matrix Server (Chat. Think Discord, but decentralized)

- CubeCoders AMP Clustering (Horizontally scaling AMP to serve multiple game servers!)

- AD-CA (Certificate Services, Cryptography, etc.)

- Power (Those batteries are maxed out again! And we're majorly overdue for a dedicated circuit for the lab.)

- Home Security (More Cameras! NVR!)

- And a few other surprises!

My workflow is pretty strange. I tend to come up with projects on the fly and develop them until I decide one of two things:

A) I want to keep them, and finish them.

or

B) I hate it, trash the VM, and move on.

There is no in-between, except for hardware projects, which have planning, purchasing, and deployment phases.

It costs money to do physical projects, so those get more precise strategizing and care. VMs are temporary, though. If I don't like it, see ya!

That's it for now,

Until next time!